The Pitfalls of System.Random

When it comes to random number generators in .NET, there's two options: System.Random and the easy to say System.Security.Cryptography.RNGCryptoServiceProvider. The former is a pseudo random number generator (PRNG), while the latter is a cryptographically secure random number generator (CSRNG). Common wisdom is to use Random, since it's faster and friendlier than RNGCryptoServiceProvider while still providing random numbers that are good enough for casual use. Unfortunately, Random is not as random as you'd like, performance leaves a lot on the table, and the API isn't nearly as nice once you get to know it.

Distribution

Primarily, the Sample(int) and Sample(int, int) methods use floating point to scale the result within the range, which introduces a significant amount of bias to the lower bits. This becomes evident in a coin flip, where out of 1 million trials, 503291 were heads - something that only should occur 0.0000000046% of the time (calculated as a two-tailed normal CDF). With 10 million trials, I get a Z-score of a whopping -23.3. Punching it into my handy-dandy calculator, that occurs at a probability of... 0. It's essentially impossible for a fair coin flip.

In addition, Donald Knuth's algorithm used by Random was not implemented correctly. Due to an incorrect constant, the period likely differs from the intended value of 2^55 - 1.

The internal sampling forces any result of 2^31 - 1 to 2^31 - 2 instead:

if (retVal == MBIG) retVal--;

As a result 2^31 - 2 is twice as likely to occur as any other result. This slightly biases all samples taken through any of Random's methods.

Additionally, NextBytes does not account for the shortened range of the internal sampling. Since the range is not inclusive, 2^31 - 1 is never reached, meaning NextBytes is very slightly biased towards generating the values 0-254. While this could be mitigated by dropping the lowest bit, NextBytes does not do so.

Finally, due to the limited precision NextDouble only provides 31 bits of precision, as opposed to the 52 bits possible with a Double.

Performance

I wrote some quick and dirty performance benchmarks to test the common wisdom that Random was faster than RNGCryptoServiceProvider (CSP). The results were surprising:

- Sampling half the range of Int32,

Randomis 28% faster thanCSP. - Sampling the full range of Int32,

Randomis 89% slower thanCSP. NextBytesis incredibly slow -Randomis 22 times slower thanCSP.

Since InternalSample only returns 31 bits of entropy, it needs to be called twice to be able to sample the full range of an Int32, hence the full range taking a little more than twice as long as half the range. NextBytes, however has the opposite problem - it has more than enough to fill 3 full bytes, yet only fills one, throwing away almost 75% of the sampled bits:

public virtual void NextBytes(Span<byte> buffer)

{

for (int i = 0; i < buffer.Length; i++)

{

buffer[i] = (byte)Next();

}

}

The only case where Random performs better than CSP ends up being a half range sample - but even then, it's only 28% faster. If performance is a true concern, other PRNGs are much faster than Random, such as the PCG or xoshiro families.

API Design

While seedable, it's not reproducible - the documentation states that the algorithm used can change at any time. This is rather confusing, since it isn't immediately obvious to users that this is the case, as the information is buried at the bottom of the docs. As a result, a lot of existing code assumes that the same seed will produce the same sequence of numbers forver. Changing the implementation would therefore be an iffy breaking change. Nevertheless, Random should not be relied on to be reproducible, as the underlying implementation may still change at some point.

The exclusive bound of Next(maxValue) means that Int32.MaxValue will never be returned from any method. This makes it impossible to sample the full range of Int32 without calling Next() twice and gluing together the results. Instead, a NextInclusive(maxValue) method should exist to allow the full 2^32 range to be sampled, as well as a method to sample a 64-bit range.

Before .NET Core 2.0, the default seed of Random was simply time-based. Correct usage would involve either one shared instance (but not across threads!) or generating and providing a unique seed. More commonly, however, those unfamiliar with Random often ended up creating new instances in a tight loop. As the resulting seeds were identical, the supposedly random numbers ended up looking rather similar. Thankfully, this has been fixed in .NET Core 2.0.

If that hasn't deterred you (perhaps you have code that expects an instance of Random), you may be inclined to inherit from Random and at least use a different algorithm - this could fix the distribution and performance issues at least. Looking at the members to override, Sample looks like the appropriate method to override, and the other methods wouldn't need to be touched. While this was the case before .NET Framework 2.0, since then you need to override 3 other methods as well. Essentially, you'll end up just rewriting the entire class. At this point, Random would be better off being an interface instead of a class.

Solutions

The biggest problems with Random can be fixed by simply changing the underlying algorithm to one that's faster and produces higher quality random numbers such as PCG. But until that happens, the next best thing using an alternative algorithm through inheritance. In fact, Math.NET offers drop-in replacements for System.Random, plus some extension methods for full range Int32s and Int64s.

Personally, I'm not entirely satisfied with this. I believe that Random's API is fundamentally flawed and needs to be replaced entirely. Therefore, I'm in the process of creating RandN to provide an easy to use and flexible API with clear extension points. The design of RandN is heavily inspired by the Rand crate for Rust, with emphasis placed on reproducible RNGs and stable distributions. It's coming along nicely, with mostly just polishing up and quality-of-life improvements before an initial release.

Changelog

- 2020-05-28

- Clarified statistic calculation.

- 2020-05-27

- Link to GitHub gist demonstrating biased random number generation.

- 2020-05-24

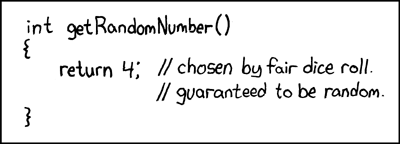

- Embedded xkcd, addded information to the last paragraph.